A short survey of the history of what is known as ‘sensory substitution’:

The purest form of vision through electricity is described by Helmholtz in his “Handbuch der Physiologischen Optik”, L. Voss, Leipzig, 1867:

“Le Roy liess den Entladungsschlag auf einen am Staar erblindeten jungen Mann wirken, indem er dessen Kopf und rechtes Bein mit einem Messingdrathe umwand und durch die Enden der Dräthe eine Leydener Flasche entlud. Bei jeder Entladung glaubte der Patient eine Flamme sehr schnell von oben nach unten vorbeigehen zu sehen, und hörte einen Knall wie von grobem Geschütze. Wenn Le Roy den Schlag durch den Kopf des Blinden allein leitete, indem er über den Augen und am Hinterkopfe Metallplatten befestigte, die mit den Belegungen einer Flasche verbunden wurden, so sah der Kranke Phantasmen, einzelne Personen, in Reihe gestellte Volkshaufen u. s. w.”

In English:

“Le Roy passed the discharge through a young man who was blind from a cataract. His head and right leg were bound with brass wire, and a Leyden jar discharged through its ends. At every discharge the patient thought he saw a flame pass rapidly downwards from above, accompanied by a noise like heavy artillery. When the shock was made to pass through the blind man’s head alone, by fixing metal plates over the eyes and on the back of the head, connected with the jar, the patient had sensations of phantastic figures, individual persons, crowds of people in lines, etc.”

In 1966 Leslie Kay invented a sonar system as an aid to the blind. T.G.R. Bower describes it here, as used in a classic experiment from 1974:

“In California, in 1974, I had the opportunity to test the hypothesis. It was explained to the parents of a 16-week-old blind baby, who were willing to take the risks involved. The New Zealand Company, Wormald Vigilant, supplied an echolocation device free of charge. The device that provided the babies in these experiments with sound information about their environment is a modification of one invented by professor Leslie Kay now at Canterbury University in New Zealand. Telesensory Systems of California agreed to modify it free of charge.

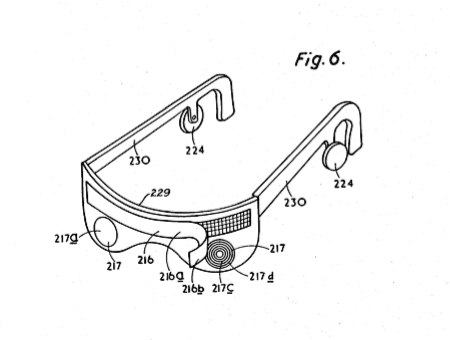

After extensive study of the bat’s ultrasonic echolocation system Professor Kay devised a pair of spectacles for blind adults incorporating an ultrasonic aid. The aid continuously irradiates the environment with ultrasound and converts reflections from objects into audible sound. Ultrasound was chosen because the size of an object that will produce an echo is inversely proportional to the frequency of the sound source. Ultrasound will thus generate echoes from smaller objects than will audible sound. The conversion from ultrasound to audible sound codes the echo in three ways. The pitch of the audible signal is arranged to indicate the distance of the object from which the echo came – high pitch means distant objects, low pitch near ones. The amplitude of the signal codes for the size of the irradiated object (loud-large, soft-small) and texture of the object is represented by clarity of the signal. In addition, the audible signal is ‘stereo’ so direction to the object is perceived by the difference in time of arrival of a signal at the two ears.

In many ways the first session was the most exciting of all. An object was introduced and moved slowly to and from the baby’s face, close enough to tap the baby on the nose. On the fourth presentation we noticed convergence movements of the eyes. These were not well controlled, but the baby was converging as the object approached and diverging as the object receded. On the seventh presentation the baby interposed his hands between face and object. This behaviour was repeated several times. Then he was presented with objects moving to right and left; he tracked them with head and eyes and swiped at the objects. The smallest object presented was a 1 cm cube dangling on the end of a wire, which the baby succeeded in hitting four times.

The mother stood the baby on her knee at arms length, chatted to him, telling him what a clever boy he was, and so on. The baby was facing her and wearing the device. He slowly turned his head to remove her from the sound field, then slowly turned back to bring her in again. This behaviour was repeated several times to the accompaniment of immense smiles from the baby. All three observers had the impression that he was playing a kind of peek-a-boo with his mother, and deriving immense pleasure from it.

The baby’s development after these initial adventures remained more or less on a par with that of a sighted baby. Using the sonic guide the baby seemed able to identify a favourite toy without touching it. He began two-handed reaches around 6 months of age. By 8 months the baby would search for an object that had been hidden behind another object. At 9 months he demonstrated ‘placing’ on a table edge and a pair of batons. None of these behaviour patterns is normally seen in congenitally blind babies. More important, so far as I know, no blind adult has been able to learn such skills with the guide.”

(from T.G.R. Bower, “Perceptual Development: Object and Space”, in E.C. Carterette, M.P. Friedman (eds.), “Handbook of Perception, Volume VIII, Perceptual Coding”, Academic Press, 1978.)
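The coding scheme in Bower's description (pitch for distance, loudness for size, arrival-time difference for direction) can be illustrated with a small sketch. This is only a toy model and not Kay's actual signal processing: the function name `encode_echo`, the frequency range, the maximum range, the ear spacing and the simple path-difference formula for the time difference are all assumptions made for illustration. The texture cue ("clarity of the signal") is left out.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air


def encode_echo(distance_m, size, azimuth_deg,
                max_range_m=5.0, min_pitch_hz=200.0, max_pitch_hz=4000.0,
                ear_spacing_m=0.18):
    """Map an object's distance, relative size (0..1) and direction to audio cues.

    All default parameter values are hypothetical, chosen only for illustration.
    """
    # Distance -> pitch: near objects give a low pitch, distant ones a high pitch.
    d = min(max(distance_m, 0.0), max_range_m)
    pitch_hz = min_pitch_hz + (max_pitch_hz - min_pitch_hz) * d / max_range_m

    # Size -> amplitude: large objects are loud, small ones soft.
    amplitude = min(max(size, 0.0), 1.0)

    # Direction -> difference in arrival time at the two ears.
    # Positive means the object is to the right (assumed sign convention).
    itd_s = ear_spacing_m * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND_M_S

    return {"pitch_hz": pitch_hz, "amplitude": amplitude, "itd_s": itd_s}


if __name__ == "__main__":
    print(encode_echo(distance_m=0.5, size=0.2, azimuth_deg=0.0))
    print(encode_echo(distance_m=4.0, size=0.8, azimuth_deg=30.0))
```

In this sketch a small object straight ahead and close by yields a quiet, low tone with no time difference, while a large object further away and to the right yields a louder, higher tone that reaches the right ear first.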

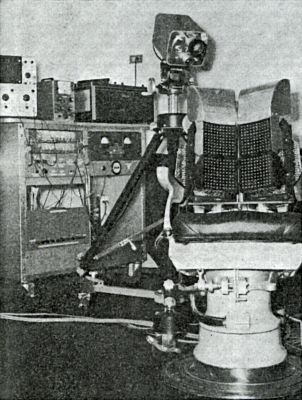

dental chair with tactile display (Bach-y-Rita, 1969)

Paul Bach-y-Rita seems to have independently begun this line of research with his experiments from 1969, in which he trained blind people to recognize images presented to them through a tactile display on their back:

“Four hundred solenoid stimulators are arranged in a twenty x twenty array built into a dental chair. The stimulators, spaced 12 mm apart, have 1 mm diameter ‘Teflon’ tips which vibrate against the skin of the back (Fig. 1). Their on-off activity can be monitored visually on an oscilloscope as a two-dimensional pictorial display (Fig. 2). The subject manipulates a television camera mounted on a tripod, which scans objects placed on a table in front of him. Stimuli can also be presented on a back-lit screen by slide or motion picture projection. The subject can aim the camera, equipped with a zoom lens, at different parts of the room, locating and identifying objects or persons.”

“With repeated presentations, the latency of time-to-recognition of these objects falls markedly; in the process the students discover visual concepts such as perspective, shadows, shape distortion as a function of viewpoint, and apparent change in size as a function of distance.”

(quotes and first two pictures from P. Bach-y-Rita, “Vision Substitution by Tactile Image Projection”, Nature vol. 221, 1969, pp. 963-964.)
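To make the idea of a twenty x twenty on-off display concrete, here is a minimal sketch of how a camera image might be reduced to such a pattern. The quoted paper does not say how the original hardware derived the stimulator states, so averaging image blocks and comparing them to a threshold is an assumption, as are the function name `image_to_tactile` and the threshold value.

```python
GRID = 20  # twenty x twenty stimulators, as in the quoted description


def image_to_tactile(image, threshold=0.5):
    """Reduce a grayscale image to a GRID x GRID on/off pattern.

    `image` is a list of rows of brightness values in 0..1.
    Block averaging and the threshold are illustrative assumptions.
    """
    rows, cols = len(image), len(image[0])
    pattern = []
    for gy in range(GRID):
        # Range of image rows covered by this stimulator row (at least one row).
        y0 = gy * rows // GRID
        y1 = max((gy + 1) * rows // GRID, y0 + 1)
        row = []
        for gx in range(GRID):
            # Range of image columns covered by this stimulator (at least one).
            x0 = gx * cols // GRID
            x1 = max((gx + 1) * cols // GRID, x0 + 1)
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            # Stimulator vibrates ("on") if the block is bright enough on average.
            row.append(sum(block) / len(block) >= threshold)
        pattern.append(row)
    return pattern


if __name__ == "__main__":
    # Test image: 40 x 40 pixels, bright left half and dark right half.
    image = [[1.0 if x < 20 else 0.0 for x in range(40)] for _ in range(40)]
    for row in image_to_tactile(image):
        print("".join("#" if on else "." for on in row))
```

Printing the pattern as `#` and `.` characters gives a rough text equivalent of the oscilloscope monitor mentioned in the quote.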

image as sent to tactile display (Bach-y-Rita, 1969)

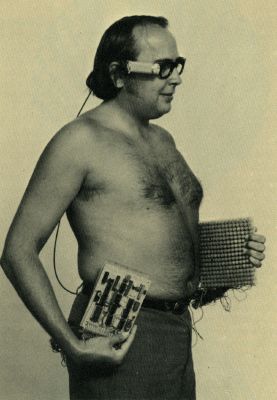

a later, mobile system by Paul Bach-y-Rita (1970)

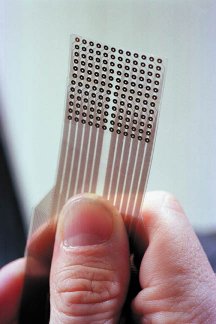

The work of Bach-y-Rita has been continued by Kurt Kaczmarek at the University of Wisconsin, and Maurice Ptito at the University of Montreal. Below are some images of the system developed in Wisconsin, which uses a grid of 144 electrodes on the tongue. There’s a great item about it at the BBC.

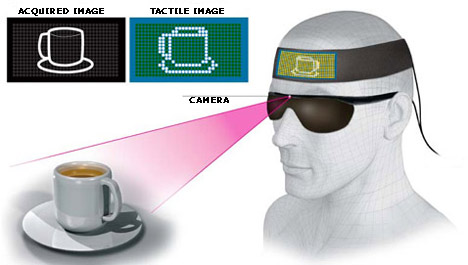

In Japan, Yonezo Kanno and Dr. Susumu Tachi have developed the Forehead Retina System along similar lines. It uses a grid of electrodes on the forehead and is supposed to work in colour (!).

And last but not least there is Peter Meijer, who since 1991 has been developing “The vOICe”, a system that maps black and white camera images to stereo sound. He also has a great page with links on the subject of sensory substitution.